Pulling Data with Requests

Requests is one of the most popular Python packages and a highly regarded tool for sending HTTP requests. In this post, we will pull data from HTML pages, JSON APIs, and XML APIs.

Pulling Data from HTML Pages

Requests makes downloading a webpage easy. For this example, we will pull in a list of countries from the cia.gov website. Specifically, we will use this URL: https://www.cia.gov/library/publications/the-world-factbook/rankorder/2004rank.html.

If you have not installed Requests already, you can do so with pip:

pip install requests

Next, we can create a new Python script that imports requests, sets up a variable for our target URL, and fetches the page:

import requests

url = 'https://www.cia.gov/library/publications/the-world-factbook/rankorder/2004rank.html'

response = requests.get(url)

html = response.text

print(html) # will print out the full source of the webpage
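The script above assumes the request always succeeds. A minimal sketch of a more defensive fetch is shown below. The helper name fetch_html, the 10-second timeout, and the choice to return None on failure are assumptions made for illustration and are not part of the original script:

```python
import requests


def fetch_html(url, timeout=10):
    """Return the page body as text, or None if the request fails.

    fetch_html is a hypothetical helper; the timeout value is an assumption.
    """
    try:
        # A timeout keeps the script from hanging on an unresponsive server.
        response = requests.get(url, timeout=timeout)
        # Raise an HTTPError for 4xx/5xx responses instead of returning an error page.
        response.raise_for_status()
    except requests.RequestException as exc:
        # RequestException is the base class for the errors Requests raises.
        print('request failed: {}'.format(exc))
        return None
    return response.text
```

Calling fetch_html(url) with the target URL would then give us either the page source or None, which the rest of the script can check before parsing.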

Once we use Requests to fetch the HTML for us, we need some way of parsing it to get the specific data that we want. Beautiful Soup is a great library for parsing HTML. We can also install Beautiful Soup using pip:

pip install bs4

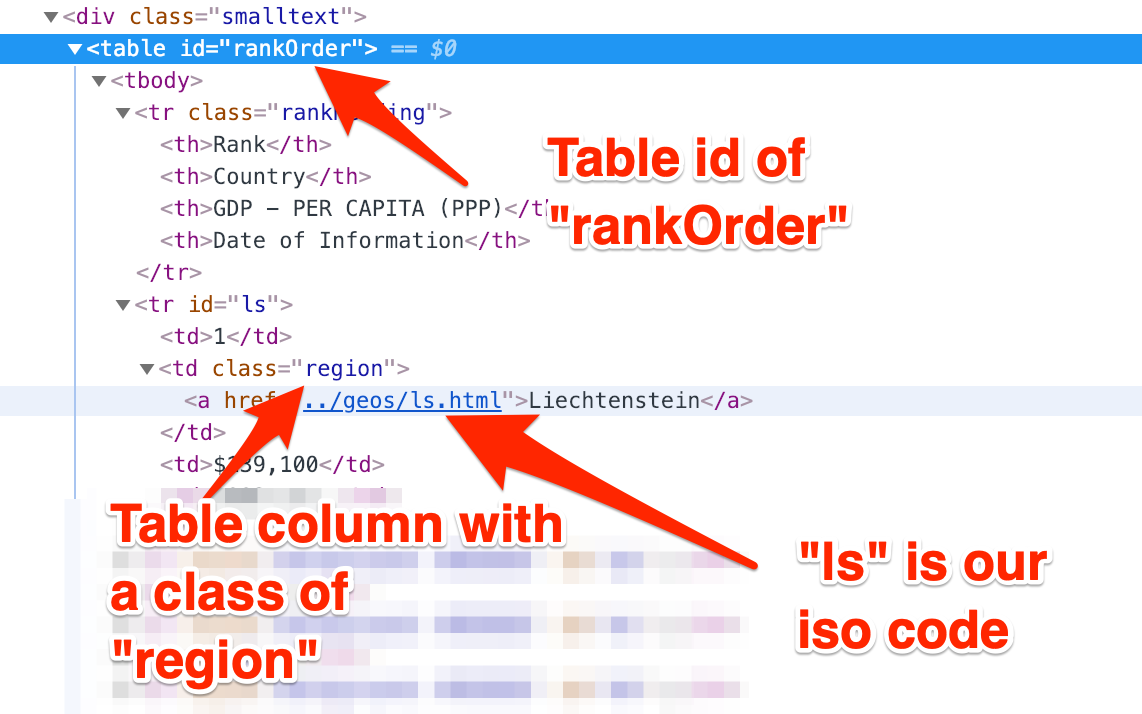

For this page, let’s grab a list of country codes. If you load our target URL using your web browser, you will see that the table has an id of “rankOrder”:

Inside each table row, there is also a column with a class of region as well as a link that contains the country code. Our next step will be to use Beautiful Soup to get all the ISO codes. We can do this by feeding our HTML into Beautiful Soup, then finding the table by its ID:

import requests

from bs4 import BeautifulSoup

url = 'https://www.cia.gov/library/publications/the-world-factbook/rankorder/2004rank.html'

response = requests.get(url)

html = response.text

soup = BeautifulSoup(html, 'html.parser')

table = soup.find(id='rankOrder')

rows = table.find_all('tr')

Although the library is called Beautiful Soup, the module we import is named bs4, and from it we import the BeautifulSoup class. Earlier we stored the response text in our html variable, so we can now pass it in when creating a new BeautifulSoup instance. The second argument names the parser to use (Python’s built-in html.parser in this case). Other parsers are available for other types of data, such as XML.

After creating a new variable called soup, we can use Beautiful Soup’s library methods to parse out different elements. We created another variable, table. From there, we created a rows variable to find all the tr elements. We can now loop through this list:

...

soup = BeautifulSoup(html, 'html.parser')

table = soup.find(id='rankOrder')

rows = table.find_all('tr')

for row in rows:

    target_column = row.find_all('td', 'region')

As we loop through the rows, we search each one for the column with a class of region and store the result in a new variable, target_column. Because find_all returns a list, which is empty when nothing matches, we should apply a guard before using this variable. There may be rows where the column doesn’t exist (e.g. the header row).

...

soup = BeautifulSoup(html, 'html.parser')

table = soup.find(id='rankOrder')

rows = table.find_all('tr')

for row in rows:

    target_column = row.find_all('td', 'region')

    if target_column:

        pass # find the anchor tag so we can parse the isocode
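To see why the guard is needed without hitting the network, here is a small offline sketch. The markup in sample_html is hypothetical: it only mimics the structure described above (a table with an id of rankOrder, a header row, and cells with a class of region), and the country names and hrefs are placeholders rather than real data:

```python
from bs4 import BeautifulSoup

# Hypothetical markup that imitates the structure described in this post.
sample_html = """
<table id="rankOrder">
  <tr><th>Rank</th><th>Country</th></tr>
  <tr><td>1</td><td class="region"><a href="../geos/aa.html">Country A</a></td></tr>
  <tr><td>2</td><td class="region"><a href="../geos/bb.html">Country B</a></td></tr>
</table>
"""

soup = BeautifulSoup(sample_html, 'html.parser')
table = soup.find(id='rankOrder')
rows = table.find_all('tr')

# class_='region' is the keyword form of the positional 'region' argument.
matches_per_row = [len(row.find_all('td', class_='region')) for row in rows]

# The header row has no matching cell, so its count is 0.
print(matches_per_row)
```

The header row yields an empty list, which is falsy, so the if target_column: check skips it.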

In order to get the ISO code, we need to find the link (the a tag) inside the column. We can parse the code from its href attribute, taking the two characters that come just before .html:

...

for row in rows:

    target_column = row.find_all('td', 'region')

    if target_column:

        link = target_column[0].find('a')

        href = link['href']

        idx_html = href.index('.html')

        country_code = href[idx_html-2:idx_html]

        print('country_code: {}'.format(country_code))

Running the code above should display a list of ISO Codes for each country.
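The index arithmetic above works as long as the code is exactly two characters long and sits directly before .html. As an alternative sketch that uses only the standard library, we could take the file name from the link and drop its extension. The helper name country_code_from_href and the sample href are assumptions for illustration:

```python
import posixpath
from urllib.parse import urlparse


def country_code_from_href(href):
    """Return the file name of the link target without its extension.

    Hypothetical helper: assumes the code is the file name, e.g. 'aa.html'.
    """
    # urlparse separates the path from any query string or fragment.
    path = urlparse(href).path
    filename = posixpath.basename(path)
    code, _extension = posixpath.splitext(filename)
    return code


print(country_code_from_href('../geos/aa.html'))
```

This version does not depend on the length of the code, and it ignores anything that follows the path, such as a query string.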

Using Beautiful Soup alongside Requests is a common approach to scraping HTML content.

Pulling Data from JSON APIs

JSON APIs are probably the most common way of pulling data, and JSON is much easier to parse and use than HTML. As in the previous example, we will pull a list of countries and parse the ISO codes, but this time with far less work. The World Bank has an API for countries, and we will use the following URL: http://api.worldbank.org/countries?format=json.

Similar to the HTML example, we need to import requests and send a GET request to our target URL:

import requests

url = 'http://api.worldbank.org/countries?format=json'

response = requests.get(url)

From here, we could pass response.text to the built-in json module and load the data into a variable. However, Requests has a built-in JSON decoder that we can use simply by calling a method:

import requests

url = 'http://api.worldbank.org/countries?format=json'

response = requests.get(url)

# built-in JSON decoder

country_data = response.json()

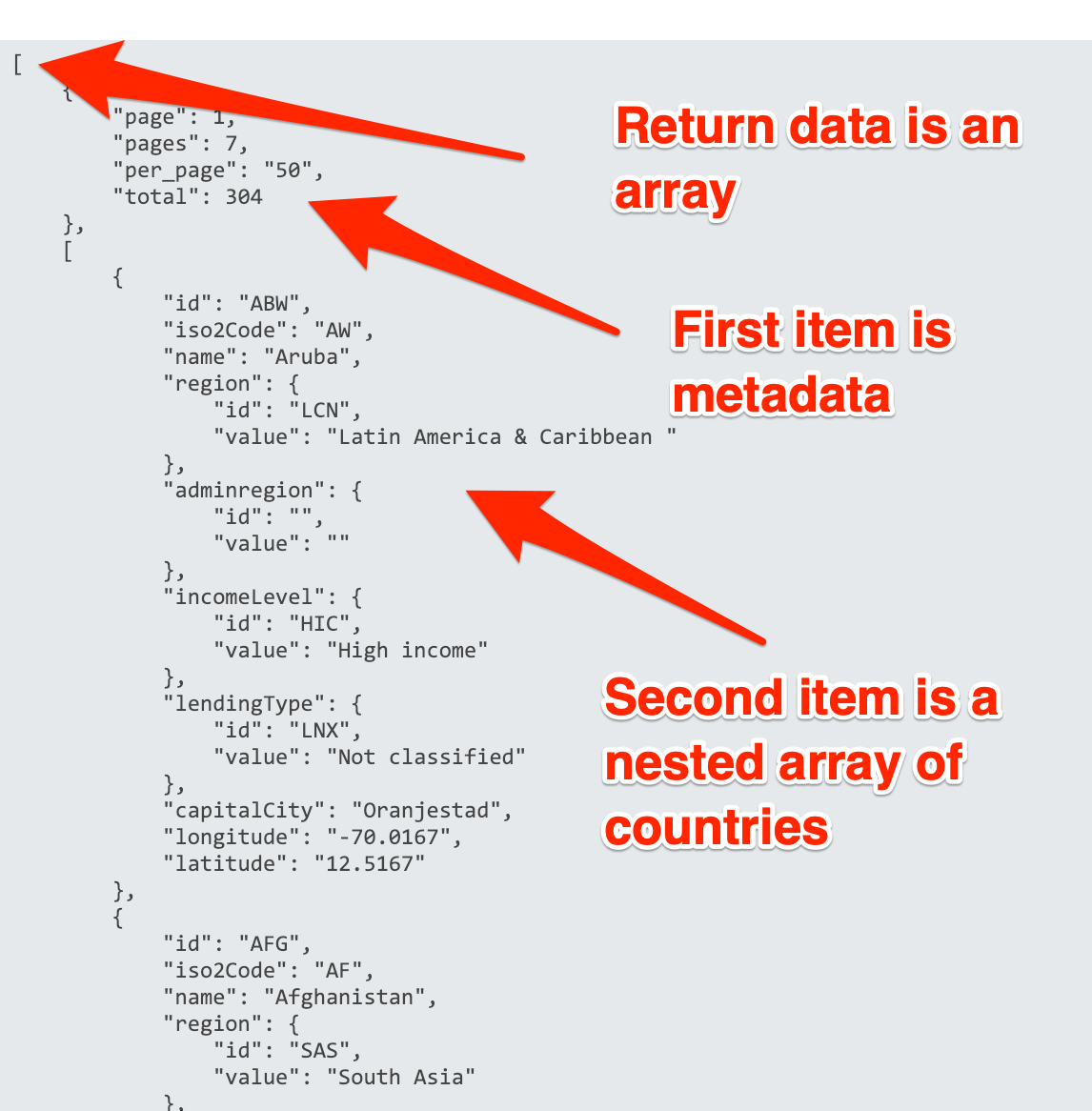

If we inspect the data we’re getting back, it’s going to be an array. The first item in the array is metadata. The second item is another array containing the list of countries, which is what we’re interested in:

import requests

url = 'http://api.worldbank.org/countries?format=json'

response = requests.get(url)

# built-in JSON decoder

country_data = response.json()

countries = country_data[1]

Above: since we’re only interested in the second item in the JSON array, we set a new variable, countries. The Requests JSON decoder has conveniently converted the array to a Python list, so we use an index of 1 to get the second item.
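To make the shape of the decoded data concrete, here is an offline sketch that uses the built-in json module on a trimmed, hypothetical payload. The metadata keys and the two placeholder countries are assumptions; only the overall layout (metadata first, list of countries second, each with name and iso2Code) comes from the description above:

```python
import json

# Hypothetical, trimmed payload in the same two-item layout as the API response.
sample_text = '''
[
  {"page": 1, "pages": 1, "total": 2},
  [
    {"name": "Country A", "iso2Code": "AA"},
    {"name": "Country B", "iso2Code": "BB"}
  ]
]
'''

# json.loads turns the JSON array into a Python list.
payload = json.loads(sample_text)

# Unpacking names both items instead of indexing with [0] and [1].
metadata, countries = payload

codes = [country['iso2Code'] for country in countries]
print(metadata['total'], codes)
```

Unpacking into metadata and countries is equivalent to using indexes 0 and 1, and it fails loudly if the response ever stops having exactly two items.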

From here, we can loop through the list of countries and find the ISO Codes.

import requests

url = 'http://api.worldbank.org/countries?format=json'

response = requests.get(url)

# built-in JSON decoder

country_data = response.json()

countries = country_data[1]

for country in countries:

    print('country: {} {}'.format(country['name'], country['iso2Code']))

Pulling Data from XML APIs

In the previous example, we used the World Bank countries API to pull a list of countries. Recall that we appended a query parameter (?format=json) to the end of the URL so that the API would return the result in JSON format. By default, the World Bank API returns data in XML format.

Setting up our script will be the same as in the previous example, except that we won’t need any query params in our URL.

import requests

url = 'http://api.worldbank.org/countries'

response = requests.get(url)

The next step is to parse the XML so that we can actually use the data. While we could probably use Beautiful Soup again, Python has a built-in XML module that can help us with this. The xml.etree.ElementTree module (imported below as ET) has a fromstring function that takes our response text and converts it to an XML Element object.

import requests

import xml.etree.ElementTree as ET

url = 'http://api.worldbank.org/countries'

response = requests.get(url)

country_data = response.text

countries = ET.fromstring(country_data)

print(countries) # XML Element Object

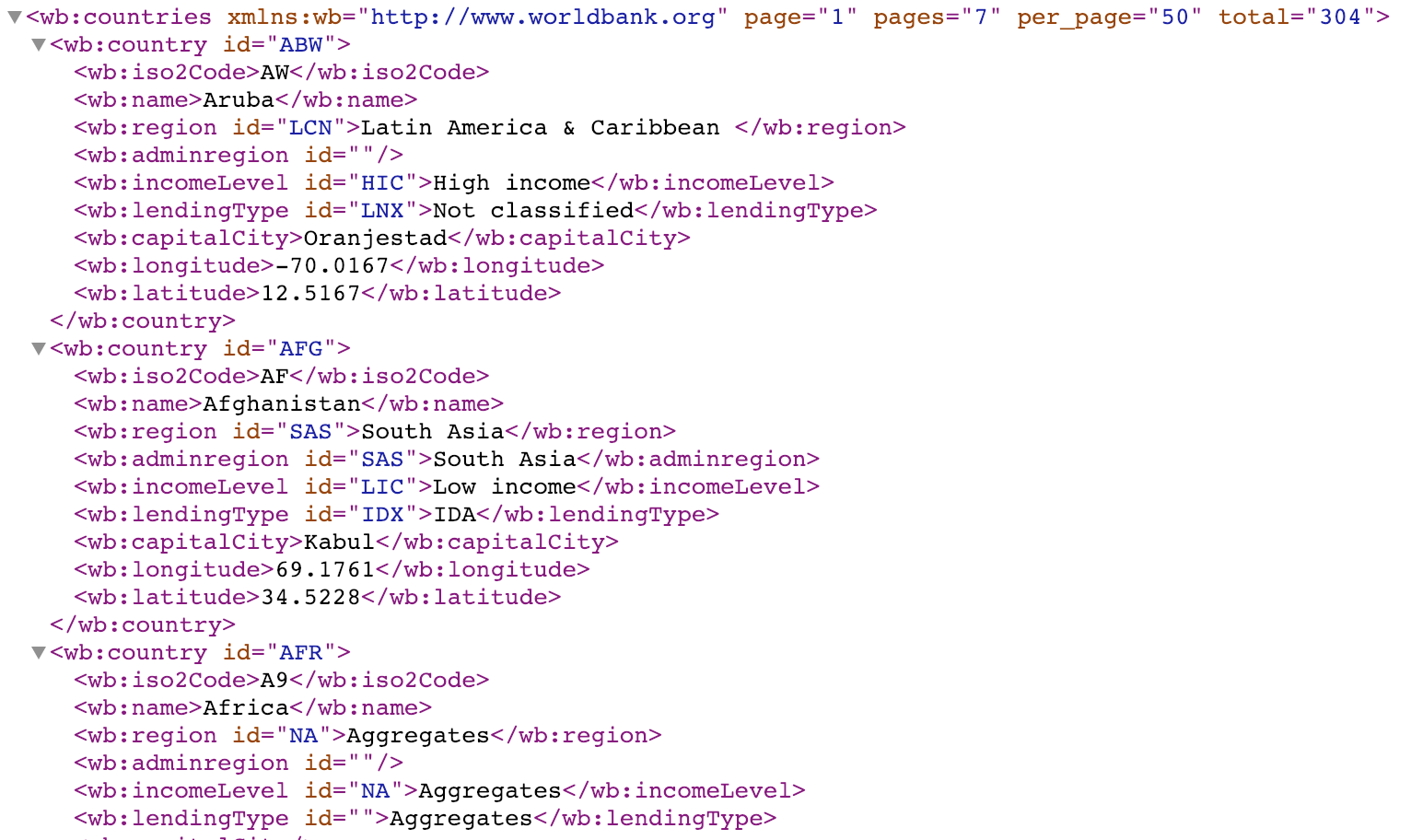

Our XML element object contains a countries element in which all the individual country elements are nested:

Notice also: there is a “wb” namespace for each element. In order to parse the data we want from this, we will have to pass a namespaces dictionary when parsing the elements:

import requests

import xml.etree.ElementTree as ET

url = 'http://api.worldbank.org/countries'

response = requests.get(url)

country_data = response.text

countries = ET.fromstring(country_data)

print(countries)

namespaces = {'wb': 'http://www.worldbank.org'}

for country in countries.findall('wb:country', namespaces):

    # each country will be an XML Element Object

    print('country: {}'.format(country))

Since each nested country element will also have a “wb” namespace, we will need to pass the namespaces dictionary into each method we use to find the country data we need:

import requests

import xml.etree.ElementTree as ET

url = 'http://api.worldbank.org/countries'

response = requests.get(url)

country_data = response.text

countries = ET.fromstring(country_data)

print(countries)

namespaces = {'wb': 'http://www.worldbank.org'}

for country in countries.findall('wb:country', namespaces):

    name = country.find('wb:name', namespaces).text

    code = country.find('wb:iso2Code', namespaces).text

    print('country: {} - {}'.format(name, code))
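The namespace handling can be tried offline with a small sketch. The XML in sample_xml is hypothetical: it reuses the wb namespace and element names from the script above, with placeholder countries, and the second country deliberately has no name element. The sketch also swaps find(...).text for findtext with a default, so a missing element does not raise an error:

```python
import xml.etree.ElementTree as ET

# Hypothetical sample that mirrors the namespaced structure described above.
sample_xml = """
<wb:countries xmlns:wb="http://www.worldbank.org">
  <wb:country id="AAA">
    <wb:iso2Code>AA</wb:iso2Code>
    <wb:name>Country A</wb:name>
  </wb:country>
  <wb:country id="BBB">
    <wb:iso2Code>BB</wb:iso2Code>
  </wb:country>
</wb:countries>
"""

root = ET.fromstring(sample_xml.strip())
namespaces = {'wb': 'http://www.worldbank.org'}

# Without the namespace, the search matches nothing.
unqualified = root.findall('country')

results = []
for country in root.findall('wb:country', namespaces):
    # findtext returns the default instead of failing when an element is missing.
    name = country.findtext('wb:name', default='(unknown)', namespaces=namespaces)
    code = country.findtext('wb:iso2Code', default='(unknown)', namespaces=namespaces)
    results.append((name, code))

print(len(unqualified), results)
```

With find(...).text, the second country would raise an AttributeError because find returns None when the element is absent.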

And now we have successfully pulled data from HTML web pages, JSON APIs, and XML APIs. Python and Requests, with some added help from libraries like Beautiful Soup, make this quick and easy.
